Previously I have shown how to upload WordPress backups to Dropbox without a WordPress backup plugin. If your site isn’t that large, then Dropbox’s free limit of 2 GB may suit your needs. However, if you have a larger site, Google Drive may be a better option since free accounts get 15 GB of space.

Thanks to the program gdrive, it is possible to upload directly to your Google Drive from the command line. This means we can use WP-CLI to back up the WordPress database and tar to archive the files, which is much faster than PHP-based WordPress backup plugins like UpdraftPlus. The script is hosted on GitHub.

You will need shell access to your VPS or dedicated server (for example, on Digital Ocean) to install the gdrive software.

Automatically Back up WordPress to Google Drive with Bash Script

Installation overview

- Install gdrive so we can upload files to Google Drive

- Test gdrive before we create the backup script

- Create the Google Drive backup script and schedule it

Install gdrive

On 64-bit Linux (Ubuntu, Debian or CentOS) this will install gdrive. If you need other versions or CPU architectures, see here.

sudo wget "https://docs.google.com/uc?id=0B3X9GlR6EmbnQ0FtZmJJUXEyRTA&export=download" -O /usr/bin/gdrive

If you want to run gdrive as a regular user, you can change the ownership of the program to that user (replace user with the actual username).

sudo chown user /usr/bin/gdrive

Make sure gdrive allows its owner to read, write and execute it (7), and everyone else to read and execute it (5):

sudo chmod 755 /usr/bin/gdrive

Now you can start the initial setup wizard by executing:

gdrive list

You will get a URL that you need to follow in your browser.

Go to the following url in your browser:

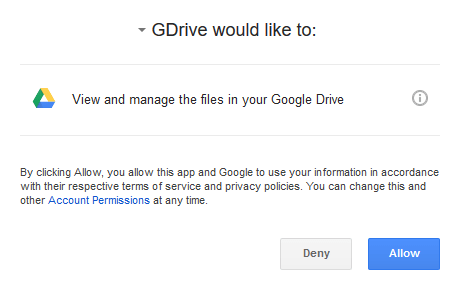

https://accounts.google.com/o/oauth2/auth?access_type=offline&client_id=367226221053-7n0vf5akeru7on6o2fjinrecpdoe99eg.apps.googleusercontent.com&redirect_uri=urn%3Aietf%3Awg%3Aoauth%3A2.0%3Aoob&response_type=code&scope=https%3A%2F%2Fwww.googleapis.com%2Fauth%2Fdrive&state=state

You will get a popup asking you to allow GDrive access, and then you will be shown a verification code.

Enter your verification code from above, and you will be shown a list of the items on your Google Drive.

Enter verification code: 4/v641ZwmsTHGD9x8k3qXyp066x7PbOsL5ID0tgFyODFE

Id Name Type Size Created

0B-zYdZ8Kfs5Zc3RhcnRlcl9maWxl Getting started bin 1.6 MB 2016-08-16 00:44:19

Test gdrive

Let’s create a backups folder

gdrive mkdir "backups"

You will get the ID of the directory, which is necessary for uploading items into that folder.

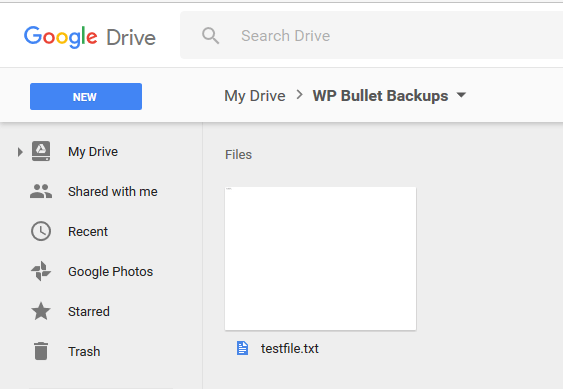

Directory 0B-zYdZ8Kfs5ZQ0ZVUGtsRlEtT2M created

You can check on your Google Drive that the folder exists.
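`gdrive mkdir` already prints the new folder's ID, as in the output above. A minimal sketch, assuming your gdrive version keeps the `Directory <ID> created` format, captures the ID directly instead of searching `gdrive list` afterwards; `folder_id_from_mkdir` is a hypothetical helper name, not part of gdrive.

```shell
#!/usr/bin/env bash

# folder_id_from_mkdir: read `gdrive mkdir` output on stdin and print the
# folder ID (second field). Prints nothing if the line does not match the
# "Directory <ID> created" format, e.g. when gdrive reports an error instead.
folder_id_from_mkdir() {
  awk '$1 == "Directory" && $NF == "created" { print $2 }'
}

# Offline check using the output shown above:
echo "Directory 0B-zYdZ8Kfs5ZQ0ZVUGtsRlEtT2M created" | folder_id_from_mkdir
```

With gdrive installed this would look like `BACKUPSID=$(gdrive mkdir "backups" | folder_id_from_mkdir)`; an empty result means the folder was not created.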

We are going to create a temporary file to test uploading with

cd /tmp

echo "test" > testfile.txt

Specify the folder ID of the backups folder we created a few steps ago. The --delete flag deletes the local copy of the file after a successful upload.

gdrive upload --parent 0B-zYdZ8Kfs5ZQ0ZVUGtsRlEtT2M --delete /tmp/testfile.txt

Uploading /tmp/testfile.txt

Uploaded 0B-zYdZ8Kfs5ZbXRrbG9GXzBoSU0 at 5.0 B/s, total 5.0 B

Removed /tmp/testfile.txt

You can check your Google Drive to see if the file ended up there.
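If the variable holding the folder ID ends up empty, an unquoted `--parent $ID --delete file` collapses to `--parent --delete file`, which is consistent with the `File not found: --delete` errors reported in the comments. A minimal guard, sketched under the assumption that you pass it a folder ID and a local file path, refuses to run the upload in that case; `upload_to_folder` is a hypothetical name.

```shell
#!/usr/bin/env bash

# upload_to_folder PARENT_ID FILE
# Refuse to call gdrive when the folder ID is empty or the file is missing,
# so --delete can never end up being read as the parent folder ID.
upload_to_folder() {
  local parent_id="$1" file="$2"
  if [ -z "$parent_id" ]; then
    echo "upload_to_folder: empty folder ID for $file" >&2
    return 1
  fi
  if [ ! -f "$file" ]; then
    echo "upload_to_folder: $file does not exist" >&2
    return 1
  fi
  # Quoting keeps the ID and paths with spaces intact.
  gdrive upload --parent "$parent_id" --delete "$file"
}
```

In the test above this would be `upload_to_folder 0B-zYdZ8Kfs5ZQ0ZVUGtsRlEtT2M /tmp/testfile.txt`, and a non-zero return tells you the upload was skipped.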

This command gets the ID of the test file. Every file and folder has an ID, so you can reference them in scripts, for example to delete them.

I will be using this technique in the Google Drive WordPress backup bash script.

gdrive list --no-header | grep testfile | awk '{ print $1}'
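The grep and awk lookup above can be wrapped in a small function. This sketch (the name `ids_matching` and the sample listing are illustrative, not real gdrive output) adds `grep -F` so the name is matched literally; with plain `grep`, the dots in a domain name such as example.com are regex wildcards.

```shell
#!/usr/bin/env bash

# ids_matching NAME: read `gdrive list --no-header` output on stdin and print
# the ID (first column) of every line that contains NAME as a literal string.
ids_matching() {
  grep -F -- "$1" | awk '{ print $1 }'
}

# Illustrative listing in the same column order as gdrive list
# (Id, Name, Type, Size, Created); not captured from a real account.
sample='0B-zYdZ8Kfs5Zc3RhcnRlcl9maWxl   Getting started   bin   1.6 MB   2016-08-16 00:44:19
0B-zYdZ8Kfs5ZQ0ZVUGtsRlEtT2M   backups           dir            2016-08-16 00:50:00
0B-zYdZ8Kfs5ZbXRrbG9GXzBoSU0   testfile.txt      bin   5.0 B    2016-08-16 00:55:00'

printf '%s\n' "$sample" | ids_matching testfile.txt
```

Because this is still a substring match, a name contained in another one (example.com inside example.com.au) returns more than one ID, so it is worth checking that you got exactly one ID before passing it to `--parent`.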

Create Google Drive Backup Script for WordPress

Now we are going to create the bash script that will use gdrive, tar and WP-CLI
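Since the script depends on gdrive, tar and WP-CLI (`wp`), a short preflight check at the top can make failures obvious, especially under cron, which usually runs with a shorter PATH than your login shell. This is a sketch; `require_commands` is a hypothetical helper, and gzip is included because the script compresses the database dump with it.

```shell
#!/usr/bin/env bash

# require_commands CMD...: report every command that is not found on PATH
# and return 1 if at least one is missing, 0 otherwise.
require_commands() {
  local missing=0 cmd
  for cmd in "$@"; do
    if ! command -v "$cmd" >/dev/null 2>&1; then
      echo "missing command: $cmd" >&2
      missing=1
    fi
  done
  return "$missing"
}
```

At the top of googledrivebackup.sh it would be called as `require_commands gdrive wp tar gzip || exit 1`. If `wp` is only missing under cron, setting PATH at the top of the script to include its install location (often /usr/local/bin for WP-CLI) is one way to fix it.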

mkdir ~/scripts

nano ~/scripts/googledrivebackup.sh

This is the script. It runs in sequence, backing up each WordPress installation and uploading it to Google Drive.

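In the DAYSKEEP variable you can specify how many days of WordPress backups you would like to keep. On each run the script deletes the backups from exactly DAYSKEEP days ago, so older backups are removed from Google Drive as long as the script runs every day.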
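The script's cleanup step looks for backups from exactly DAYSKEEP days ago, so if a daily run is skipped, that day's files are never removed. Comparing dates avoids this. The sketch below is an alternative technique, not the script's own method: the function names and the listing are hypothetical, it needs GNU date, and it assumes the `DATE-SITE.tar.gz` and `DATE-SITE.sql.gz` names the script uploads.

```shell
#!/usr/bin/env bash

# cutoff_date TODAY DAYS: print the YYYY-MM-DD date DAYS days before TODAY
# (GNU date syntax; -u keeps the result independent of the local time zone).
cutoff_date() {
  date -u -d "$1 -$2 days" +%Y-%m-%d
}

# ids_on_or_before CUTOFF SITE: read `gdrive list --no-header` lines on stdin
# and print the ID of every backup of SITE dated on or before CUTOFF.
# Only names of the form DATE-SITE.tar.gz or DATE-SITE.sql.gz are considered,
# so example.com does not match example.com.au. YYYY-MM-DD strings sort
# chronologically, so a plain string comparison is enough.
ids_on_or_before() {
  awk -v cutoff="$1" -v site="$2" '
    {
      name = $2
      day = substr(name, 1, 10)
      if (day !~ /^[0-9][0-9][0-9][0-9]-[0-9][0-9]-[0-9][0-9]$/) next
      if (name != (day "-" site ".tar.gz") && name != (day "-" site ".sql.gz")) next
      if (day <= cutoff) print $1
    }'
}

# Illustrative listing with made-up IDs:
listing='0Baaa   2017-04-07-example.com.tar.gz      bin   10.0 MB   2017-04-07 00:00:05
0Bbbb   2017-04-08-example.com.sql.gz      bin   1.0 MB    2017-04-08 00:00:09
0Bccc   2017-04-09-example.com.tar.gz      bin   10.0 MB   2017-04-09 00:00:05
0Bddd   2017-04-07-example.com.au.tar.gz   bin   8.0 MB    2017-04-07 00:00:04'

cutoff=$(cutoff_date 2017-04-15 7)
printf '%s\n' "$listing" | ids_on_or_before "$cutoff" example.com
```

In the loop, each printed ID would then be passed to `gdrive delete`. gdrive 2.x lists 30 files by default and shortens long names, so its `--max` and `--name-width` options are worth checking with `gdrive help list` on your version before relying on this.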

#!/usr/bin/env bash

# Source: https://guides.wp-bullet.com

# Author: Mike

#define local path for backups

BACKUPPATH="/tmp/backups"

#define remote backup path

BACKUPPATHREM="WP-Bullet-Backups"

#path to WordPress installations, no trailing slash

SITESTORE="/var/www"

#date prefix

DATEFORM=$(date +"%Y-%m-%d")

#Days to retain

DAYSKEEP=7

#calculate days as filename prefix

DAYSKEPT=$(date +"%Y-%m-%d" -d "-$DAYSKEEP days")

#create array of sites based on folder names

SITELIST=($(ls -d $SITESTORE/* | awk -F '/' '{print $NF}'))

#make sure the backup folder exists

mkdir -p $BACKUPPATH

#check remote backup folder exists on gdrive

BACKUPSID=$(gdrive list --no-header | grep $BACKUPPATHREM | grep dir | awk '{ print $1}')

if [ -z "$BACKUPSID" ]; then

gdrive mkdir $BACKUPPATHREM

BACKUPSID=$(gdrive list --no-header | grep $BACKUPPATHREM | grep dir | awk '{ print $1}')

fi

#start the loop

for SITE in ${SITELIST[@]}; do

#delete old backups: get the IDs of this site's files from DAYSKEEP days ago and delete each one

OLDBACKUPS=$(gdrive list --no-header | grep -F "$DAYSKEPT-$SITE." | awk '{ print $1}')

for OLDBACKUP in $OLDBACKUPS; do

gdrive delete $OLDBACKUP

done

# create the local backup folder if it doesn't exist

if [ ! -e $BACKUPPATH/$SITE ]; then

mkdir $BACKUPPATH/$SITE

fi

#enter the WordPress folder

cd $SITESTORE/$SITE

#back up the WordPress folder

tar -czf $BACKUPPATH/$SITE/$DATEFORM-$SITE.tar.gz .

#back up the WordPress database, compress and clean up

wp db export $BACKUPPATH/$SITE/$DATEFORM-$SITE.sql --all-tablespaces --single-transaction --quick --lock-tables=false --allow-root --skip-themes --skip-plugins

cat $BACKUPPATH/$SITE/$DATEFORM-$SITE.sql | gzip > $BACKUPPATH/$SITE/$DATEFORM-$SITE.sql.gz

rm $BACKUPPATH/$SITE/$DATEFORM-$SITE.sql

#get current folder ID

SITEFOLDERID=$(gdrive list --no-header | grep $SITE | grep dir | awk '{ print $1}')

#create folder if doesn't exist

if [ -z "$SITEFOLDERID" ]; then

gdrive mkdir --parent $BACKUPSID $SITE

SITEFOLDERID=$(gdrive list --no-header | grep $SITE | grep dir | awk '{ print $1}')

fi

#upload WordPress tar

gdrive upload --parent $SITEFOLDERID --delete $BACKUPPATH/$SITE/$DATEFORM-$SITE.tar.gz

#upload wordpress database

gdrive upload --parent $SITEFOLDERID --delete $BACKUPPATH/$SITE/$DATEFORM-$SITE.sql.gz

done

#Fix permissions

sudo chown -R www-data:www-data $SITESTORE

sudo find $SITESTORE -type f -exec chmod 644 {} +

sudo find $SITESTORE -type d -exec chmod 755 {} +

Ctrl+X, Y and Enter to save and exit.
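The script treats every folder directly under SITESTORE as a WordPress site and runs WP-CLI from that folder. For layouts like /home/nginx/domains/domain.com/public from the comments, a sketch like this one could locate WordPress roots by looking for wp-config.php instead. It rests on assumptions: `find_wp_roots` is hypothetical, it does not handle a wp-config.php moved one level above the WordPress root (which WordPress allows), and the backup loop would then need to work with full paths rather than bare folder names.

```shell
#!/usr/bin/env bash

# find_wp_roots DIR [MAXDEPTH]: print every directory below DIR that contains a
# wp-config.php file, searching at most MAXDEPTH levels deep (default 3).
# -mindepth 2 skips a wp-config.php sitting directly in DIR itself.
find_wp_roots() {
  local base="$1" depth="${2:-3}"
  find "$base" -mindepth 2 -maxdepth "$depth" -type f -name wp-config.php \
    -exec dirname {} \; | sort
}

# Example: find_wp_roots /home/nginx/domains
# would print /home/nginx/domains/domain.com/public for the layout above.
```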

Now add the script as a cronjob so it runs every day

crontab -e

Enter this line so the script runs daily (@daily means once a day at midnight). You may want to use an absolute path to the script (e.g. /home/user/scripts or /root/scripts).

@daily /bin/bash ~/scripts/googledrivebackup.sh

Ctrl+X, Y and Enter to save and exit.
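If you would rather add the entry without opening an editor, for example from a provisioning script, a common pattern is to pipe the current crontab through a filter and back into `crontab -`. This sketch uses a hypothetical `merge_cron_entry` helper that appends the line only once, so running it twice does not schedule two backups.

```shell
#!/usr/bin/env bash

# merge_cron_entry LINE: read an existing crontab on stdin and print it with
# LINE appended exactly once (any identical existing line is dropped first).
merge_cron_entry() {
  local entry="$1"
  # grep exits 1 when it prints nothing (e.g. empty crontab); that is fine here.
  grep -vxF -- "$entry" || true
  printf '%s\n' "$entry"
}

# Usage:
# crontab -l 2>/dev/null | merge_cron_entry "@daily /bin/bash /root/scripts/googledrivebackup.sh" | crontab -
```

When no crontab exists yet, `crontab -l` prints an error and nothing else; the `2>/dev/null` hides that message and the helper starts from an empty crontab.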

Now your WordPress or WooCommerce backups will automatically be uploaded to Google Drive without having to rely on a WordPress plugin.

This already loops through all of your sites if they follow a logical structure :). There is no need to make a separate script for each installation.

I’m unable to replicate this issue, it’s working for me after copying and pasting into nano.

Try changing this variable: BACKUPPATHREM="WP-Bullet-Backups"

Thanks, it worked; however, I got this:

Error: This does not seem to be a WordPress install.

my domains are under

/home/nginx/domains/domain.com/public

I entered

SITESTORE="/home/nginx/domains/"

What is the best way to handle that?

—-

also got

chown: invalid user: ‘www-data:www-data’

Looks like you are not on Ubuntu or Debian, are using custom repositories or another distro so you’ll need to find out the user your web server software runs as.

Hello,

Why do we need --skip-themes --skip-plugins?

I tried to restore the backup, but I got an almost empty WordPress. Why do you want to exclude themes and plugins?

That is just to prevent any plugin or theme errors from stopping the WP-CLI command. Those flags only stop WP-CLI from loading themes and plugins while it runs the export; they do not leave anything out of the backup. The whole database and WordPress folder are backed up, so if you are missing anything in WordPress it is probably your database prefix set incorrectly or something like that.

Thanks, that helped, it was the db prefix. Can we automate this, for example by reading the prefix from the old install and putting it inside the SQL file name, so that when restoring we know the prefix? Or maybe with a search and replace after restoring the files?

This is something you should be considering when you create fresh WP installs to restore backups to. Personally, I don’t bother changing the db prefix, as I have seen no evidence that it offers any security benefit.

I got this message

512.0 B/253.1 MB

Failed to upload file: googleapi: Error 403: Rate Limit Exceeded, rateLimitExceeded

I was not doing too many requests

It’s unlikely Google would throttle you for no reason; if many requests are coming from the same IP address, Google will rate limit you.

Also, the db backup sometimes fails when run by cron, please see https://www.dropbox.com/s/vlmfcjbiqckvxvu/Screenshot%202017-04-15%2013.09.09.png?dl=0

Is there any log or debug output for that?

You can add > /tmp/dropbox.log 2>&1 to the end of the crontab line, which sends both normal output and errors to that file, and you should get some error logs that way.

I got this

Success: Exported to ‘/tmp/backups/domain.com/2017-04-15-domain.com.sql’.

No valid arguments given, use ‘gdrive help’ to see available commands

Failed to get file: googleapi: Error 404: File not found: --delete., notFound

Failed to get file: googleapi: Error 404: File not found: --delete., notFound

I highly recommend getting the script to work manually first before adding it as a cronjob, those errors show the modifications you may have made are not working so the gdrive command fails. Run the script manually many times for a few days to get it working properly.

These are the error codes from the manual run:

Try dismantling the script and run things line by line to see where they go wrong. Unfortunately I do not have the time to debug where you are going wrong but you are welcome to hire me for troubleshooting.

Amazing tutorial. Thanks!!!

Thanks for sharing Yi!

Thank you for the amazing tutorial. Is it possible to exclude the “cache” folder in “wp-content”? Mine is 13 GB, which is pretty big, and doesn’t need to be backed up. Thanks.

Hey Duc!

My pleasure, glad you liked it :).

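The tar command has an --exclude flag, so you can probably add an --exclude option for the cache folder to the tar line in the script. This thread covers why an exclude pattern sometimes does not match: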

https://unix.stackexchange.com/questions/32845/tar-exclude-doesnt-exclude-why

Thank you for your help! After many tries, this works for me:

tar --exclude='./wp-content/cache' -czf $BACKUPPATH/$SITENAME/$SITE/$DATEFORM-$SITE.tar.gz .
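To check an exclude pattern before running a full 13 GB backup, a throwaway test can help. This sketch (GNU tar assumed; the folder and file names are made up) archives a tiny fake site the same way the script does, from inside the site folder with `.` as the source, so member names start with `./` and the pattern `./wp-content/cache` matches.

```shell
#!/usr/bin/env bash

# demo_exclude: build a fake site in a temporary folder, archive it with the
# same --exclude pattern as above, list the archive contents, then clean up.
demo_exclude() {
  local tmp
  tmp=$(mktemp -d)
  mkdir -p "$tmp/site/wp-content/cache" "$tmp/site/wp-content/uploads"
  echo "cached page" > "$tmp/site/wp-content/cache/page.html"
  echo "upload" > "$tmp/site/wp-content/uploads/photo.txt"
  # Archive from inside the site folder, like the backup script's tar line;
  # the archive itself is written outside the folder being archived.
  (cd "$tmp/site" && tar --exclude='./wp-content/cache' -czf "$tmp/backup.tar.gz" .)
  tar -tzf "$tmp/backup.tar.gz"
  rm -rf "$tmp"
}

demo_exclude
```

If the listing still shows wp-content/cache entries, the pattern does not match the member names, which is the situation the linked thread discusses.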